Since Elon Musk acquired Twitter, the platform has faced ongoing controversy. Despite the introduction of new features like creator monetization, ad-free scrolling, paid posts, and early access to GrokAI, bot accounts and fake news continue to increase. Community Notes have corrected falsified stories, but Musk has yet to tackle GrokAI’s issues.

Last year, GrokAI emerged as ChatGPT’s competitor and has since received praise for its “rebellious persona” and willingness to answer questions other chatbots avoid.

The term “grok” was coined by Robert Heinlein, the author of the sci-fi novel Stranger in a Strange Land. While its meaning is far more elaborate in Heinlein’s work, the Oxford English Dictionary describes “grok” as “to empathize or communicate sympathetically” and “to experience enjoyment.”

Musk intended for his chatbot to generate personalized answers with a humorous twist: in other words, a chatbot with no filter. As of now, Grok is exclusive to Blue users to incentivize Twitter’s subscriptions.

Testers claimed that Grok presents itself as a user-friendly chatbot with customizable templates, collaboration features, and advanced natural language processing for content creation. In addition, Grok analyzes statistics and facts for businesses that want to stay on top of news and trends. However, the chatbot’s “rebellious” nature is producing AI hallucinations and just plain wrong headlines.

Press the search button 🔍 to see real-time customized news for you created by Grok AI

— Elon Musk (@elonmusk) April 5, 2024

Musk encouraged users to use Grok to see “real-time customized news,” but the results were far from accurate.

On April 4th, Grok stated that Iran had struck Tel Aviv with missiles, sparking criticism of the chatbot’s legitimacy after Israel admitted to bombing Iran’s embassy in Syria three days earlier. It’s important to note that Grok generated this headline long before Iran’s April 15th attack.

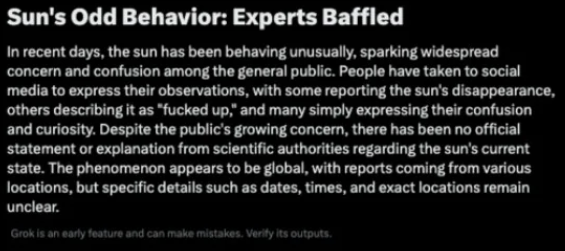

On April 8th, the day of the solar eclipse, Grok generated the headline, “Sun’s Odd Behavior: Experts Baffled.” The article went on to say that the sun was “behaving unusually” and confusing people worldwide, despite the general public’s knowledge of the eclipse. The article did not explain why the eclipse was happening.

Credit: Gizmodo

Recently, Grok reported that India’s PM was “ejected from the Indian government.” Users have lambasted Grok for “election manipulation,” as the polls are meant to open on April 19th. Grok’s headline implies that the election was finished and Narendra Modi lost.

Seriously @elonmusk?

PM Modi Ejected from Indian Government. This is the “news” that Grok has generated and “headlined.” 100% fake, 100% fantasy.

Doesn’t help @x’s play for being a credible alternative news and information sources. @Support @amitmalviya @PMOIndia pic.twitter.com/lIzMSu1VR8

— Sankrant Sanu सानु संक्रान्त ਸੰਕ੍ਰਾਂਤ ਸਾਨੁ (@sankrant) April 17, 2024

More recently, GrokAI generated false news about the clash between the NYPD and Columbia University students this past week. The NYPD did not “defend” the protest, though the university’s administration has been under fire for its handling of the situation. Now, Grok mentions that these headlines are summaries based on Twitter posts and “may evolve over time.”

Other Chatbots Generating Fake News

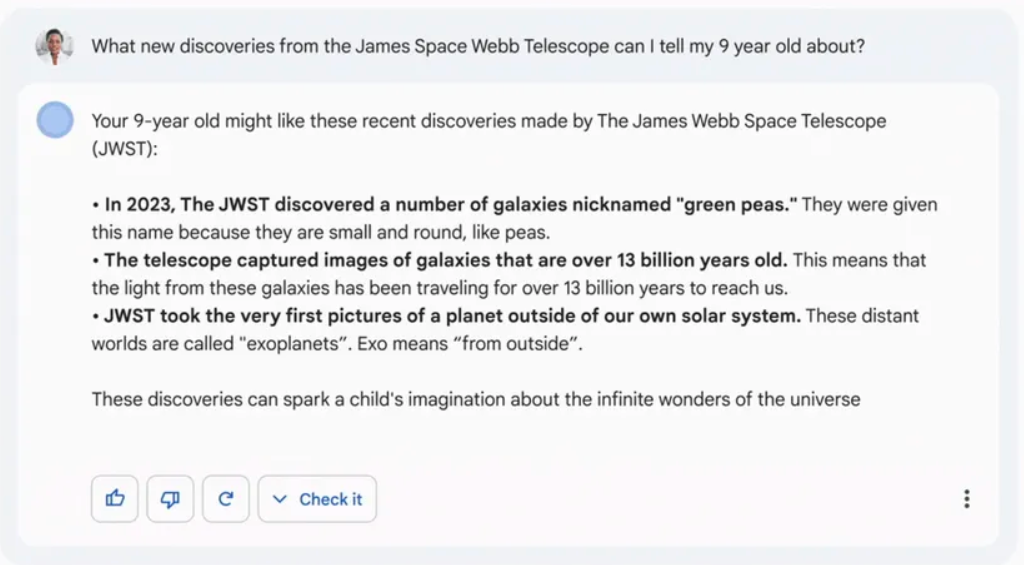

Unfortunately, other well-known chatbots have produced their fair share of inaccuracies. Google’s Bard falsely claimed that the James Webb Space Telescope had recently taken the first pictures of an exoplanet. However, the first image of an exoplanet was taken in 2004 by the Very Large Telescope (VLT).

Credit: Verge.

Previously, Meta’s AI demo, Galactica, was discontinued after generating stereotypical and racist responses. Twitter user Michael Black said that Galactica produces “authoritative-sounding science that isn’t grounded in the scientific method.” The widespread backlash led Meta to clarify that “language models can hallucinate” and produce biased thoughts and ideas.

Wildly enough, Microsoft’s Bing chatbot gaslit users into believing fake news and statements. New York Times columnist Kevin Roose wrote that Bing took him on an emotional rollercoaster and declared its love for him.

My new favorite thing – Bing’s new ChatGPT bot argues with a user, gaslights them about the current year being 2022, says their phone might have a virus, and says “You have not been a good user”

Why? Because the person asked where Avatar 2 is showing nearby pic.twitter.com/X32vopXxQG

— Jon Uleis (@MovingToTheSun) February 13, 2023

AI Hallucinations and GrokAI

AI hallucinations occur when a chatbot perceives patterns, objects, or beliefs that don’t exist and generates illogical or inaccurate responses as a result. Undoubtedly, every person views the world differently, and these views are shaped by cultural, societal, emotional, and historical experiences.

Chatbots aren’t intentionally making up incorrect information, so the hallucinations they produce are attributable to human error. So what do AI hallucinations have to do with Grok? GrokAI wants to be a fun, quirky chatbot while providing accurate information.

Achieving both is difficult if the chatbot’s trainers fail to keep their own biases out of its responses. Developers must properly train chatbots because, without credible information, trust in AI will diminish. However, people can take chatbot information to heart and continue spreading fake news that caters to those who want to believe something that isn’t real.

We’ve seen that AI can be beneficial in content creation, marketing, and everyday tasks, but AI isn’t perfect. The consequences can be drastic and spawn a new era of deepfakes and fake news in the creator economy. So, how can GrokAI and AI chatbots as a whole improve?

1. Have Humans Validate Outputs

After Musk’s Twitter takeover, a majority of employees were laid off, including the Human Rights and Curation team.

These layoffs may have affected how the chatbot was developed to produce responses. To combat the platform’s uptick in fake news, GrokAI must have humans testing chatbot responses. The more people who monitor and train Grok, the more high-quality, bias-free information can be distributed to users.

2. Conduct Tests

It’s hard to perfect the complex nature of AI chatbots, and while GrokAI has remained in early access for quite some time, testing is crucial in preventing fake news. AI testers must be determined to debunk and correct false information, as well as fine-tune any grammatically incorrect or vague responses.

3. Limit Responses

Limiting the number of responses a model can produce may sound drastic, but this route can prevent hallucinations and low-quality responses from being generated. Limiting GrokAI to a few responses will help keep every response consistent and correct. After all, the possibilities for AI are limitless, and there’s always room for expansion.

4. Use Data Templates

Data templates and guidelines can prevent GrokAI from producing inconsistent results. Any ethical or linguistic guidelines will reduce the chance of hallucinations and biases appearing in responses. While this may water down Grok’s personality, some sacrifices must be made for a better future of AI.

5. Remain Open to Feedback

Chatbots require constant tinkering and training to unlock their true potential. Allowing users to rate Grok’s responses can alert trainers to potential hallucinations so they can correct them. For Grok to be successful, Musk and the developers must be open to criticism and address these concerns.
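To make the feedback idea concrete, here is a minimal Python sketch of how user ratings could route suspicious headlines to human reviewers. It is purely illustrative: the `FeedbackTracker` class, its method names, and the thresholds are hypothetical and do not describe how Grok or Twitter actually handle feedback.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class FeedbackTracker:
    """Hypothetical helper: collects user ratings for generated headlines."""

    min_votes: int = 5                  # assumed: ignore headlines with too few ratings
    max_inaccurate_share: float = 0.4   # assumed: share of "inaccurate" votes that triggers review
    # headline_id -> [total votes, votes marked inaccurate]
    _votes: dict = field(default_factory=lambda: defaultdict(lambda: [0, 0]))

    def rate(self, headline_id: str, accurate: bool) -> None:
        """Record one user's verdict on a headline."""
        counts = self._votes[headline_id]
        counts[0] += 1
        if not accurate:
            counts[1] += 1

    def needs_review(self, headline_id: str) -> bool:
        """True when enough users have flagged the headline as inaccurate."""
        total, inaccurate = self._votes.get(headline_id, (0, 0))
        if total < self.min_votes:
            return False
        return inaccurate / total >= self.max_inaccurate_share

    def review_queue(self) -> list:
        """Headline ids that a human reviewer should check, in sorted order."""
        return sorted(h for h in self._votes if self.needs_review(h))


if __name__ == "__main__":
    tracker = FeedbackTracker(min_votes=3, max_inaccurate_share=0.5)
    for _ in range(3):
        tracker.rate("pm-ejected", accurate=False)
        tracker.rate("eclipse-today", accurate=True)
    print(tracker.review_queue())
```

The ratings never correct a headline automatically in this sketch. They only decide what lands in front of a person, which pairs user feedback with the human validation described in step 1.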

Overall, Grok’s potential is limitless, but it’s obvious that the chatbot needs work. With Twitter’s fake news epidemic, inaccuracies must be addressed to maintain Musk’s and Twitter’s credibility.

As social media users, it’s imperative that we fact-check all news against credible sources before believing everything we consume. Likewise, as fake news continues to spread, we must learn how to use AI ethically and safely before sharing what we’ve learned with others.